Number Classification ANN

UC Berkeley - Fall 2019

Overview

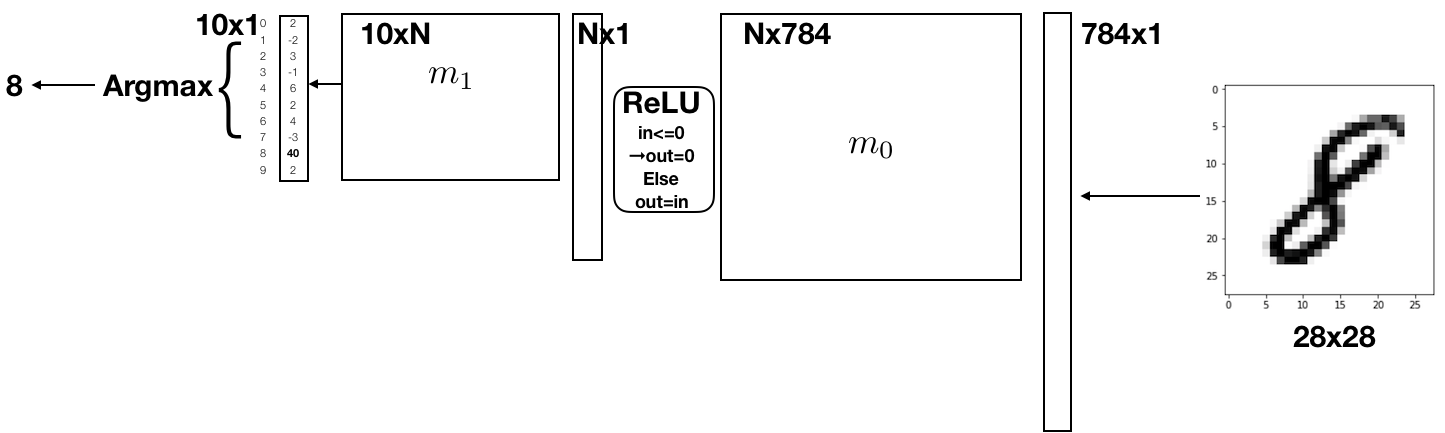

For my computer architecture class, I built an artificial neural network (ANN) in RISC-V assembly that classifies handwritten digits from the MNIST data set. The ANN uses matrix and vector operations and two nonlinear functions to make the classification. The network is pictured below:

The image of the number 8 on the right is a handwritten sample that is flattened into an array (like a list) of 784 pixels. The input then passes through the network, which consists of matrix and vector multiplications with two trained matrices, m0 and m1, and two nonlinear functions, ReLU and Argmax. ReLU turns negative numbers into zero while leaving positive numbers as they are, and Argmax returns the index of the largest value in an array of numbers. The index returned by Argmax is the network's classification of the digit in the image.
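As a rough illustration of the two nonlinear functions described above, here is a minimal Python sketch (the project itself was written in RISC-V assembly). The function names and the tie-breaking rule in `argmax` (the first largest value wins) are assumptions made for this example, not details taken from the project.

```python
def relu(values):
    """Replace every negative entry with 0; leave the others unchanged."""
    return [v if v > 0 else 0 for v in values]


def argmax(values):
    """Return the index of the largest entry (assumed: first one wins on ties)."""
    best_index = 0
    for i in range(1, len(values)):
        if values[i] > values[best_index]:
            best_index = i
    return best_index


scores = [-3, 7, 0, 12, -1]
print(relu(scores))    # [0, 7, 0, 12, 0]
print(argmax(scores))  # 3
```

Both functions are a single pass over the array, which is part of why they translate naturally into a simple assembly loop.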

The Code

I used RISC-V assembly, a low-level language that offers only basic instructions, such as logical operators, arithmetic, bitwise operations, and loads and stores to memory, and has only 32 registers for holding program information like variables and memory addresses. I implemented general matrix and vector multiplication functions, the ReLU and Argmax nonlinear functions, and functions for reading and writing matrices. The main program put all of these functions together and consisted of four main parts: loading matrices, running the mathematical layers, writing the output, and calculating the classification (label).

With so few registers available, I ran into trouble with running out of space and overwriting my variables when calling multiple functions in a row. The key to the project was saving register values to my program's memory before calling new functions so that no variables would be lost. While the math of the ANN wasn't especially complex, the difficulty of the project came from working with limited built-in functionality and very limited storage space. Completing this project made me realize that I take the ease of Python's syntax and memory safety for granted!
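To give a feel for what "general matrix multiplication" looks like without built-in matrix types, here is a Python sketch that treats each matrix as a flat array and uses explicit index arithmetic, roughly the way an assembly routine has to. The row-major layout, the strided `dot` helper, and all function and parameter names are assumptions for this example; the write-up does not say how the original routines were organized.

```python
def dot(a, a_start, a_stride, b, b_start, b_stride, length):
    """Dot product of two strided slices of flat arrays."""
    total = 0
    for k in range(length):
        total += a[a_start + k * a_stride] * b[b_start + k * b_stride]
    return total


def matmul(a, a_rows, a_cols, b, b_rows, b_cols):
    """Multiply flat row-major matrices a (a_rows x a_cols) and b (b_rows x b_cols)."""
    if a_cols != b_rows:
        raise ValueError("inner dimensions must match")
    out = [0] * (a_rows * b_cols)
    for i in range(a_rows):
        for j in range(b_cols):
            # Row i of a is contiguous (stride 1); column j of b is strided by b_cols.
            out[i * b_cols + j] = dot(a, i * a_cols, 1, b, j, b_cols, a_cols)
    return out


a = [1, 2, 3, 4]  # 2 x 2
b = [5, 6, 7, 8]  # 2 x 2
print(matmul(a, 2, 2, b, 2, 2))  # [19, 22, 43, 50]
```

In Python the loop counters and running totals are just local variables. In assembly, each of them occupies a register, which is where the pressure described above comes from once one function calls another.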

Example

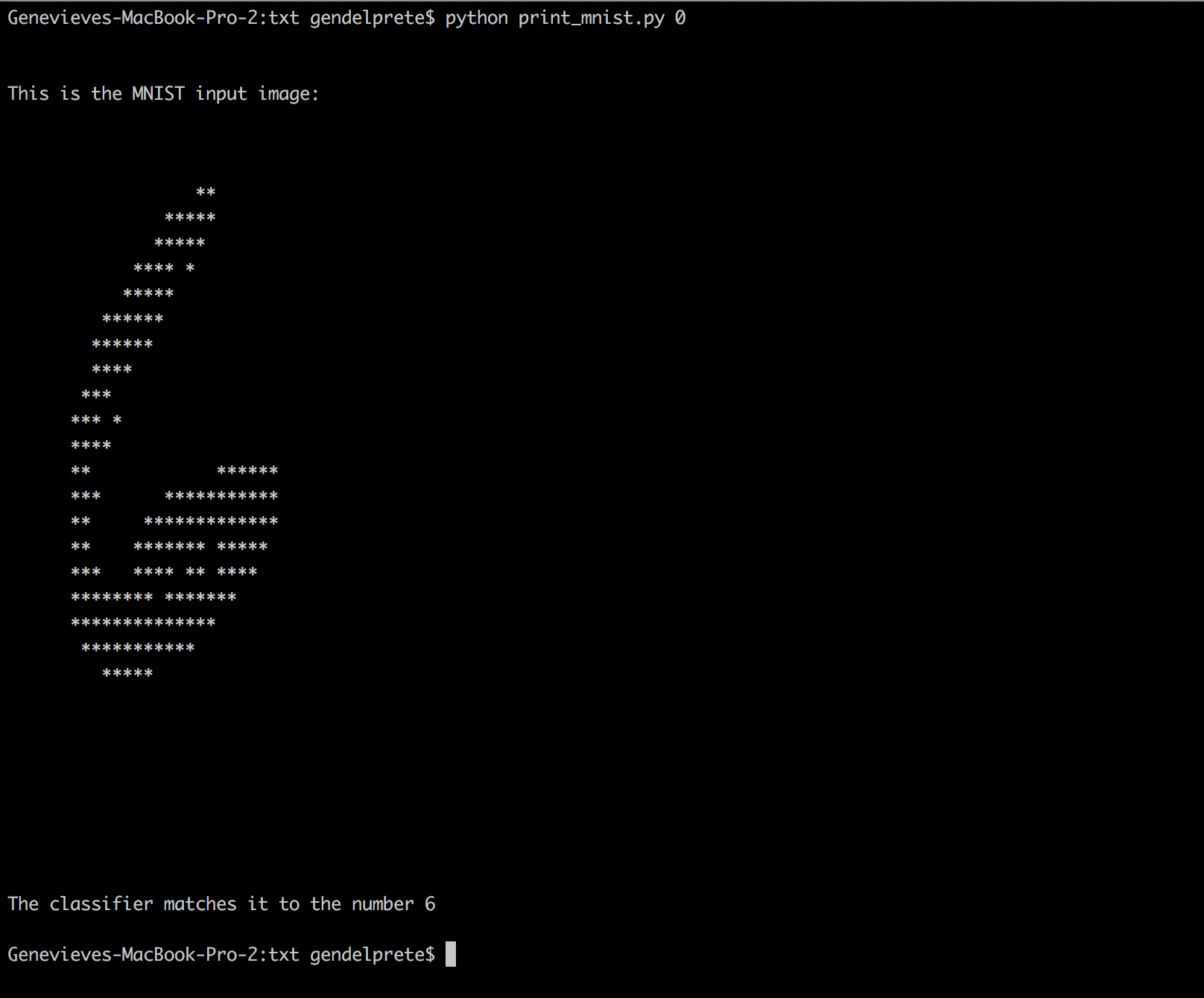

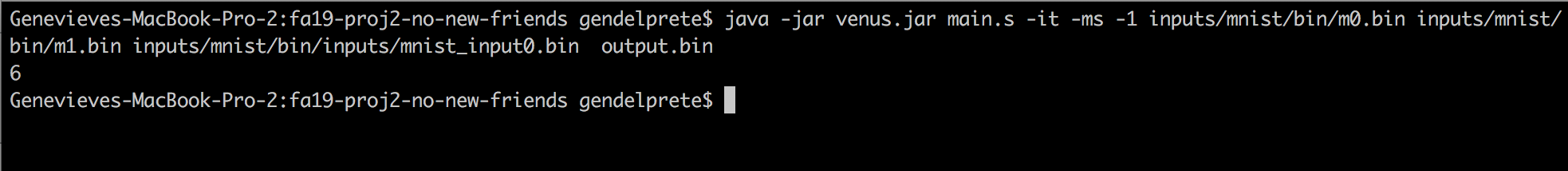

Above is an ASCII representation of the handwritten digit we will be classifying. Just from looking at it, the image should be classified as the number six! The image will be flattened into a 784 x 1 array of numbers and then sent through the ANN.
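The flattening step can be sketched in a few lines of Python. MNIST images are 28 x 28 pixels, which is where the 784 comes from. The row-by-row ordering and the `flatten` helper are assumptions for this example, and the image here is a blank placeholder with a single bright pixel rather than the actual sample.

```python
def flatten(image):
    """Concatenate the rows of a 2D image into one flat list of pixels."""
    flat = []
    for row in image:
        flat.extend(row)
    return flat


# Placeholder 28 x 28 image: all zeros except one bright pixel at row 3, column 5.
image = [[0] * 28 for _ in range(28)]
image[3][5] = 255

pixels = flatten(image)
print(len(pixels))        # 784
print(pixels.index(255))  # 89, i.e. 3 * 28 + 5
```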

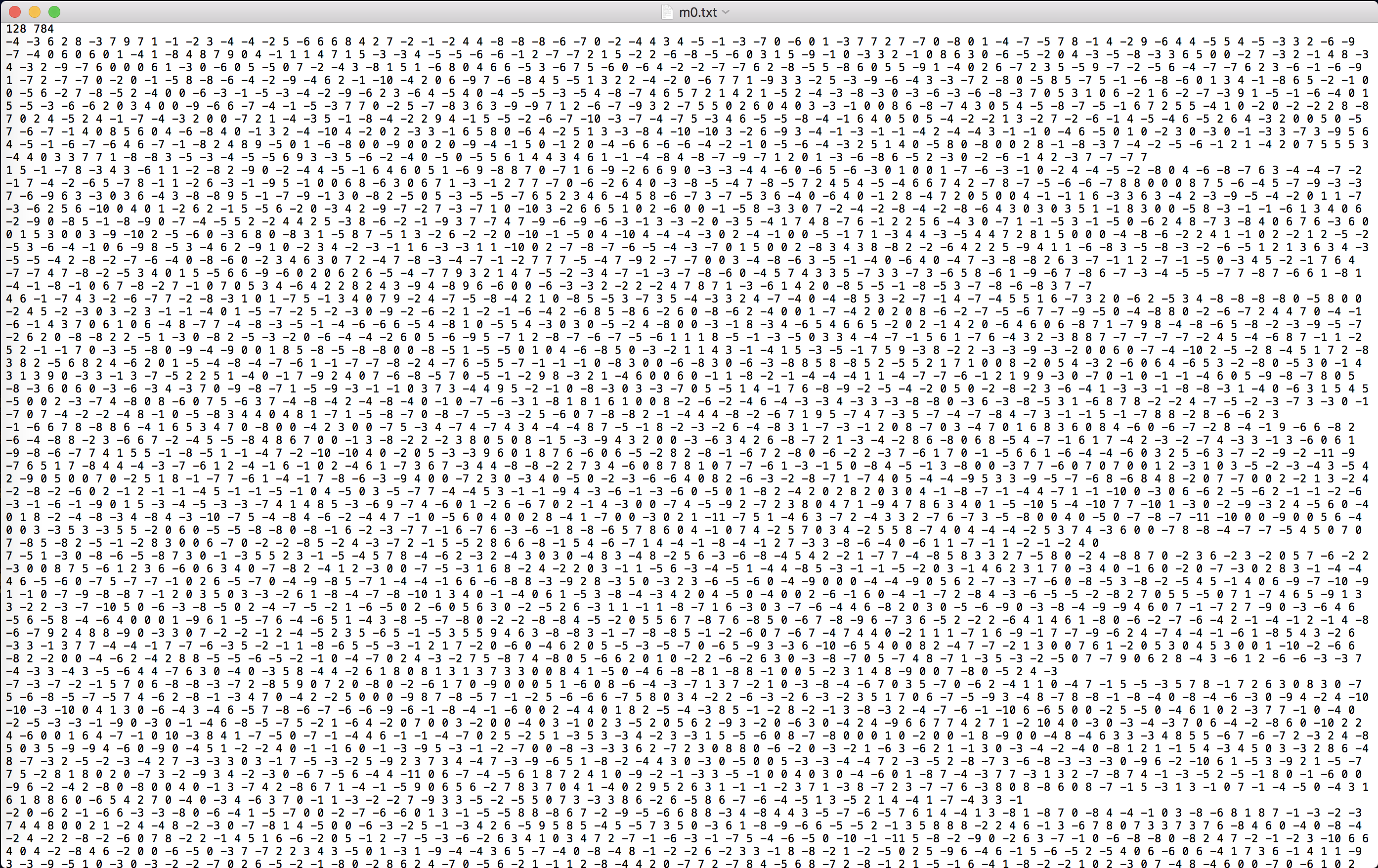

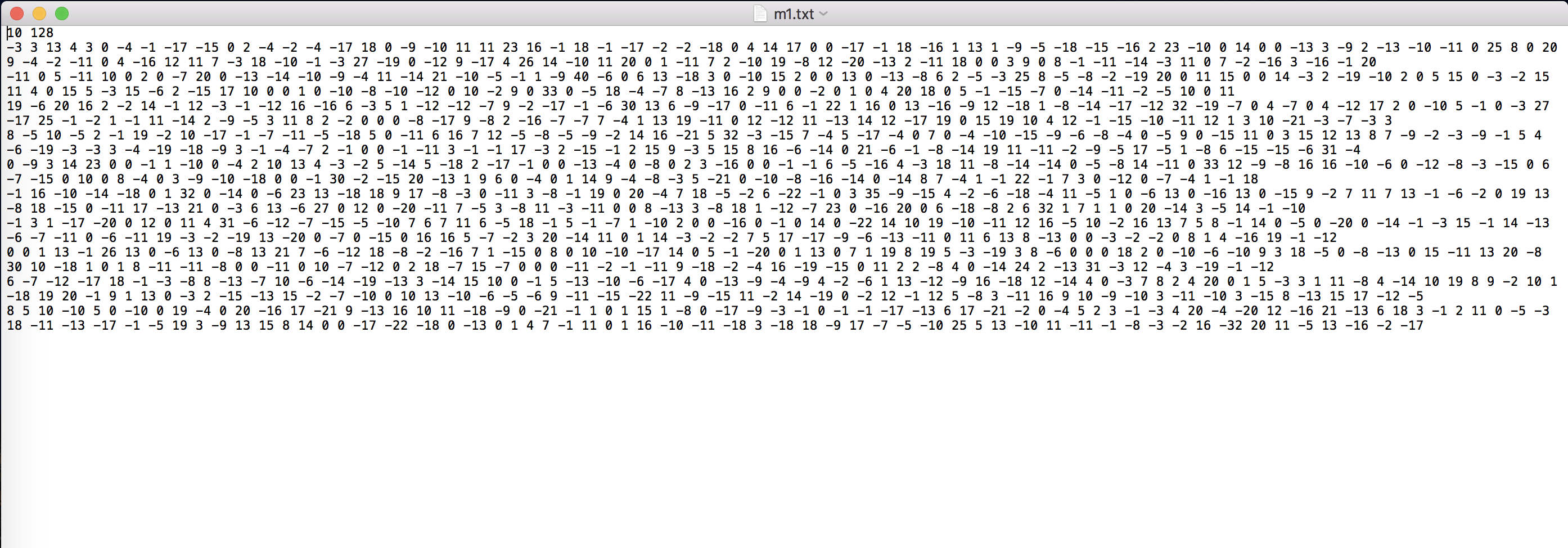

Above are the two trained matrices, m0 and m1. m0 is a 128 x 784 matrix, and m1 is a 10 x 128 matrix. The flattened 784 x 1 input image is multiplied by m0 to give 128 values, which pass through ReLU; the result is multiplied by m1 to give 10 scores, one per digit, and Argmax picks the index of the highest score as the classification. The matrices are created by tuning the network on training images so that it classifies new images as accurately as possible.
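Putting the pieces together, the whole forward pass can be sketched in Python as below. This is an illustration only: the helper names are hypothetical, and the matrices are tiny made-up stand-ins (3 x 4 and 2 x 3) for the real 128 x 784 and 10 x 128 ones, so that the arithmetic can be checked by hand.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    if any(len(row) != len(vector) for row in matrix):
        raise ValueError("each row must be as long as the vector")
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def classify(m0, m1, pixels):
    """Compute argmax(m1 * relu(m0 * pixels))."""
    hidden = [max(0, h) for h in matvec(m0, pixels)]  # ReLU
    scores = matvec(m1, hidden)                       # one score per class
    return max(range(len(scores)), key=lambda i: scores[i])  # Argmax


# Made-up stand-ins for the real m0 (128 x 784) and m1 (10 x 128).
m0 = [[1, 0, 0, 0],
      [0, -1, 0, 0],
      [0, 0, 1, 1]]
m1 = [[1, 1, 0],
      [0, 1, 2]]
pixels = [2, 3, 1, 1]

# m0 * pixels = [2, -3, 2]; after ReLU = [2, 0, 2]; m1 * hidden = [2, 4]
print(classify(m0, m1, pixels))  # 1
```

With the real matrices the shapes work out the same way: 784 inputs become 128 hidden values, which become 10 scores, and the index of the largest score is the predicted digit.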

After running the main program, the network did all of the math and manipulations behind the scenes and classified the image correctly as the number six!

Languages Used